Row by Row example by the numbers

There are two main ways to perform a traditional Extraction, Load and Transformation (ETL). The first way is a row by row examination of the source and the then a row by row process into the destination. The other way is to process the source information in bulk and then load in bulk. Both methods have their pros and cons and is typically problem specific.

This example will be a step by step walk through of a row by row extraction, load and transform (ETL).

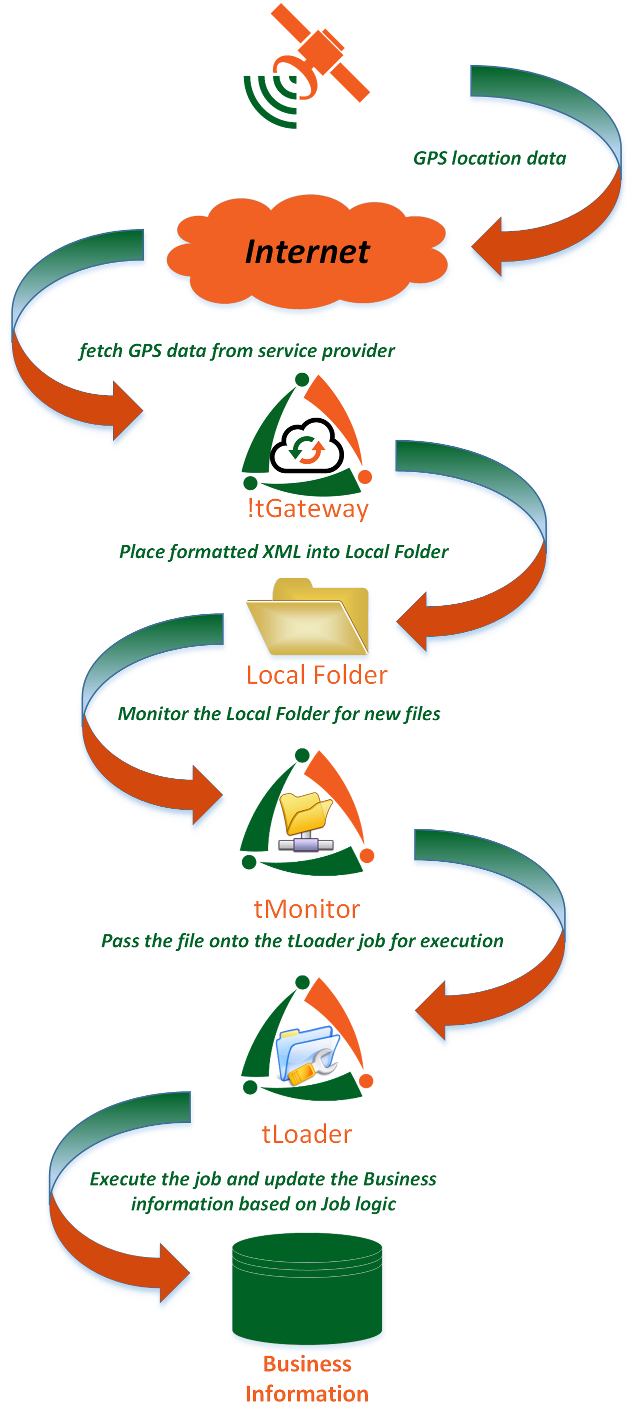

Overview

The example below will read an XML file that was created by !Tgateway. The data is GPS breadcrumb data from a web service. !Tgateway pulls the data from the web service, transforms it into workable XML and then places the result into a local folder. This is considered the source table. !Tsuite considers XML as raw table data which is a powerful way of dealing with XML data.

The Flow

- GPS tracking devices collect GPS locations

- !Tgateway reads data from a web service over the Internet

- !Tgateway coverts the web service call document into a workable XML file and places it in a local folder

- tMonitor then notices the new file and forwards the text file to the tLoader job that has been configured to consume the XML data

- tLoader then executes the business logic to determine if the XML row data is to discarded, updated, inserted or unconditionally processed

- Business information is dealt with using the tLoader definition

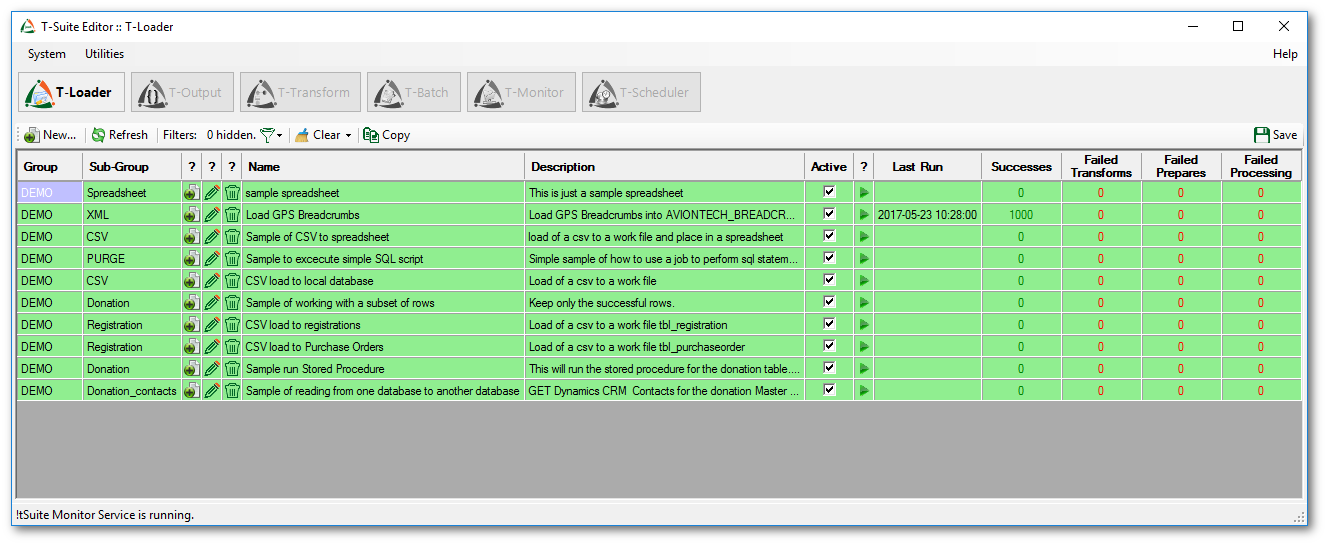

!tSuite main page

The tab we are most interested for this example is T-Loader. The actual job for this example is “Load GPS Breadcrumbs”. Click on the edit pencil to view the job steps.

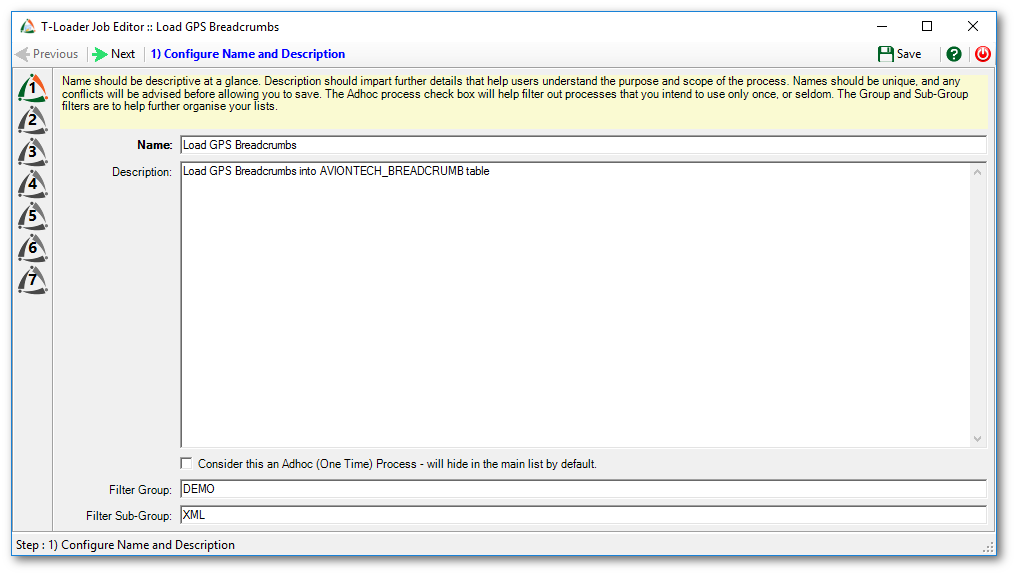

Step 1: Configure Name and Description

This step allows you to name the job, describe the job and finally provide a group and sub group filter.

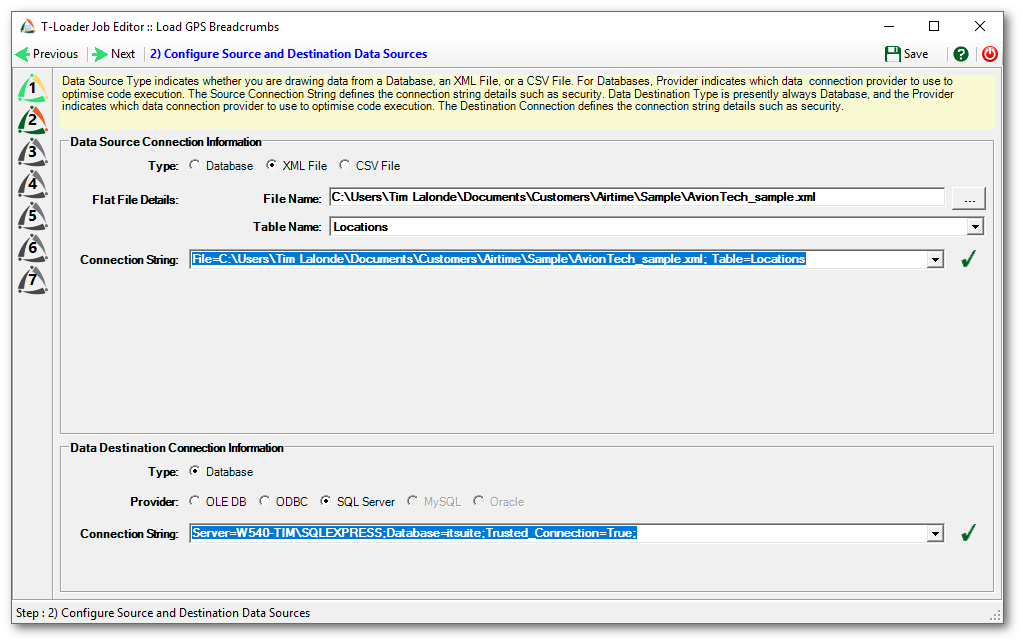

Step 2: Configure Source and Destination Data Sources

The top half of the screen is used to describe the source definition and the bottom of the screen is used to define the ultimate destination.

For our example the source is an XML file. Select the radio button for XML file. Clicking the … button will allow you to browse for a file location. Once a file has been selected the table name will be populated with the name of Parent row tags from the XML. Finally the connection string will be automatically created by the values entered in the file name and table name entry boxes.

The destination for this job is a Native SQL server instance with the connection information as listed in the connection string input area.

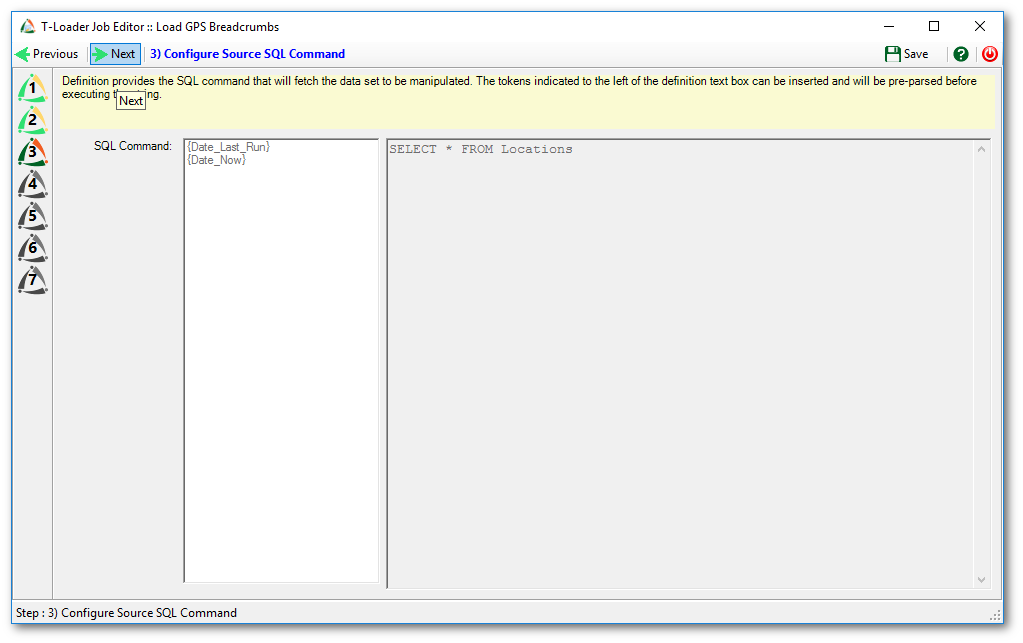

Step 3: Configure Source SQL Command

For our example Step 3 is auto populated and it cannot be altered. If the source was a database then this area would be a full features SQL select statement to return all the rows to be processed. Any valid SQL select statement can be used. However our example does NOT require this area to be filled in.

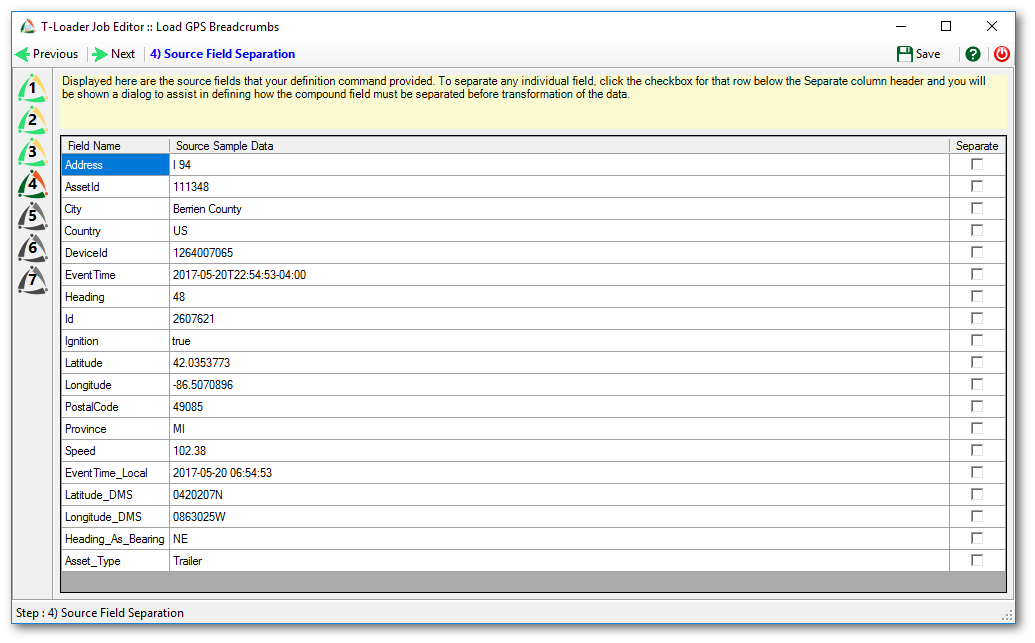

Step 4: Source Field Separation

This step allow you to further bread down a field into sub components. This rarely used but very power feature is an excellent way to deal with those data sources that have more than one field value in a single field definition. This is very common in dealing with legacy macro use for on board computing systems. In our example there is no need to have field separation. You will notice that you get a field definition for all tags in the table definition for “Location”. Now you are able to deal with very specific controls based on what the XML file has sent you via the table definition.

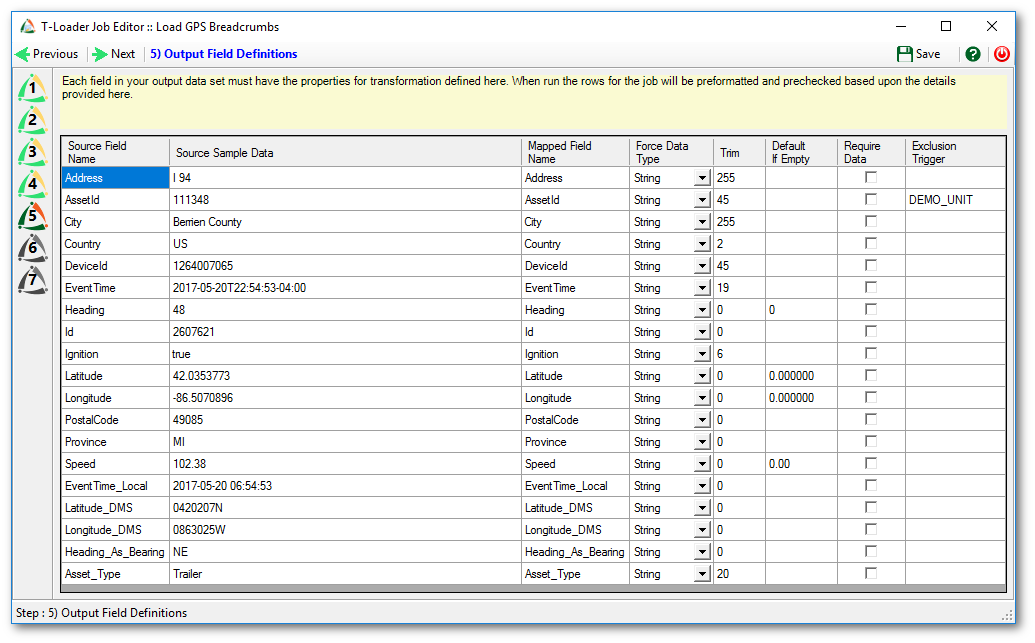

Step 5: Output Field Definitions

This step is a very useful step for anyone tasks with dealing with unlike systems. Much of the coding effort can be removed by simple entries on this screen.

In the example above some techniques where used to make the downstream work more readable, reliable and flexible.

The exclusion trigger for AssetID with a value of DEMO_UNIT. What this will do is if AssetID is read that equals DEMO_UNIT the whole row will be ignored. A nice trick to quickly get rid of consistent data from your destination system with having to code for it with SQL.

The trim column will automatically trim the source field to match a destination requirement. Again, a very fast way of getting rid of complex sql statements downstream. This work is none in memory and very fast. Most SQL engines pay a heaving price for character trim functions. This in memory work is one way of gaining performance.

Default if empty. By filling in what you want as a default when NO source value is presented is a nice clean way to get around ugly downstream sql statements like isnull and/or coalesce. By leveraging in memory processing the data can be transformed without having to burden the source or destination sql engines.

Require data if checked will ensure that NO null values are sent. If a NULL value is sent, an error is trapped and sent the tWatcher function for review of the row in question.

Force data type will allow you to convert source types to destination types. Use the drop down list for these in memory conversions. The goal of these functions is to leverage in memory processing outside of the SQL engines.

Mapped Field Name can be used to rename the source field definitions to make the sql statements easier to read and to deal with. Most users will use this area to get rid of unwanted spaces or to make legacy column names more human readable.

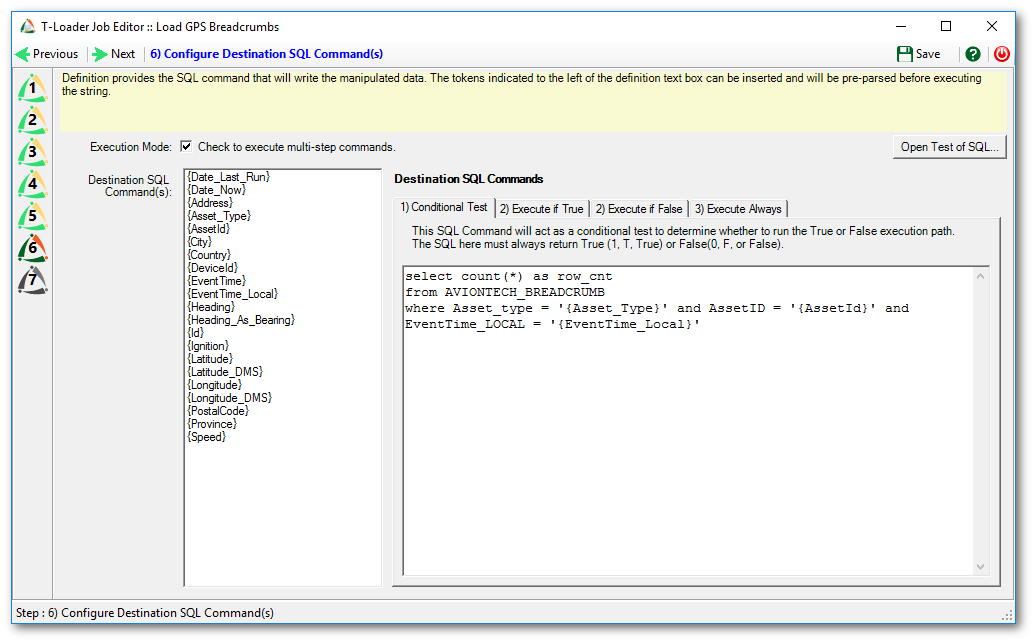

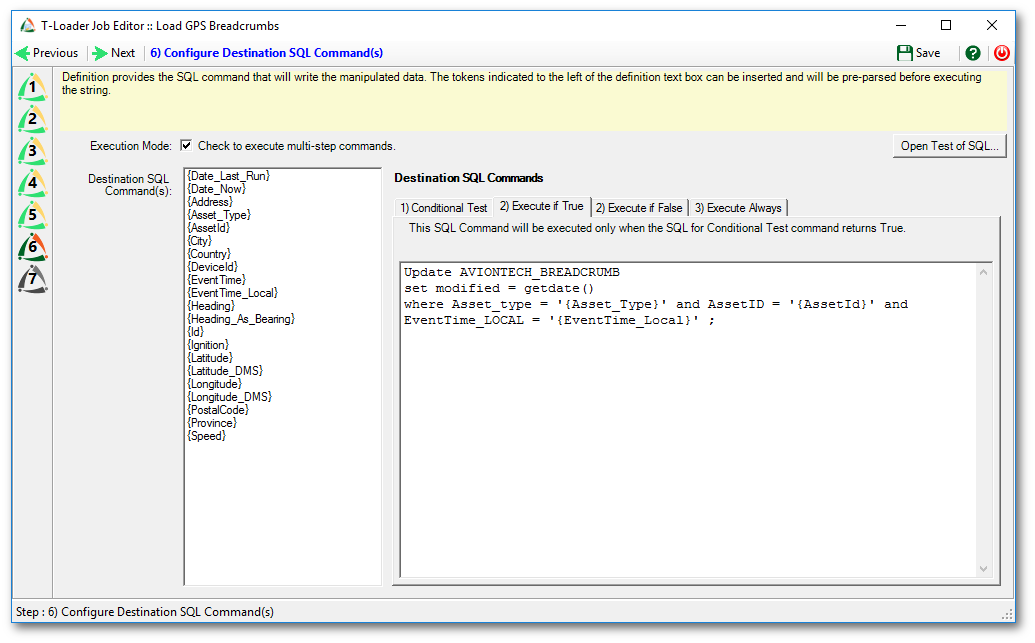

Step 6: Configure Destination SQL command(s)

For our example w are going to click “Execution Mode” to “Check to execute multi-Step commands.

1) Conditional Test

This example is testing to see if a row already exists for the specific asset at the specific time with the specific type. If at least one row returns with that condition the true condition will be executed. If a value of 0 is returned the false condition will be executed.

2) Execute if True

If a row is returned from the test condition, the SQL statement will be executed. In our example the modified data of the breadcrumb table will be set to the current system date and time.

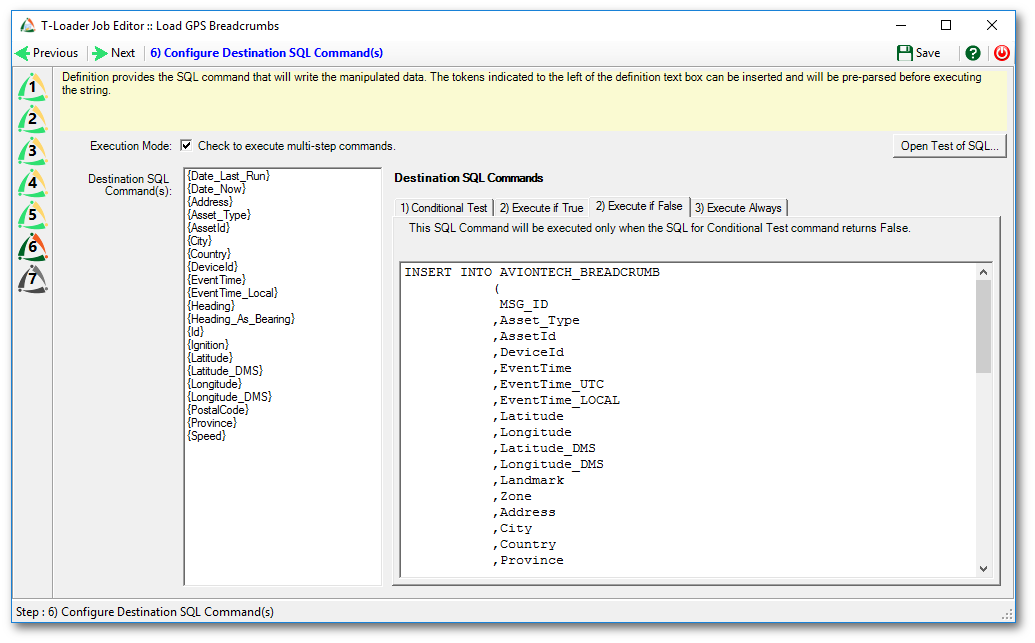

2) Execute if False

If a zero or false condition is returned from the test condition, this sql statement will be executed. In this example an insert will be executed against the breadcrumb table of the destination sql server database.

!Tsuite replaces everything between the {} exactly. You can get quite fancy with leveraging this reality of the tool when it comes to character field concatenations. Refer to the Landmark column below.

INSERT INTO AVIONTECH_BREADCRUMB ( MSG_ID ,Asset_Type ,AssetId ,DeviceId ,EventTime ,EventTime_UTC ,EventTime_LOCAL ,Latitude ,Longitude ,Latitude_DMS ,Longitude_DMS ,Landmark ,Zone ,Address ,City ,Country ,Province ,PostalCode ,Speed_mph ,Speed_kph ,Heading ,Heading_As_Bearing ,Ignition ,created_by ,created ,modified) VALUES ( '{Id}' ,'{Asset_Type}' ,'{AssetId}' ,'{DeviceId}' ,'{EventTime}' ,replace('{EventTime}','T',' ') ,'{EventTime_Local}' ,{Latitude} ,{Longitude} ,'{Latitude_DMS}' ,'{Longitude_DMS}' ,LEFT('{Address}, {City}, {Province}, {Country}, {PostalCode} ',255) ,NULL ,'{Address}' ,'{City}' ,'{Country}' ,'{Province}' ,'{PostalCode}' ,cast(({Speed} * 0.621371) as decimal(5,2)) ,{Speed} ,'{Heading}' ,'{Heading_As_Bearing}' ,'{Ignition}' ,'TSuite' ,getdate() ,getdate() )

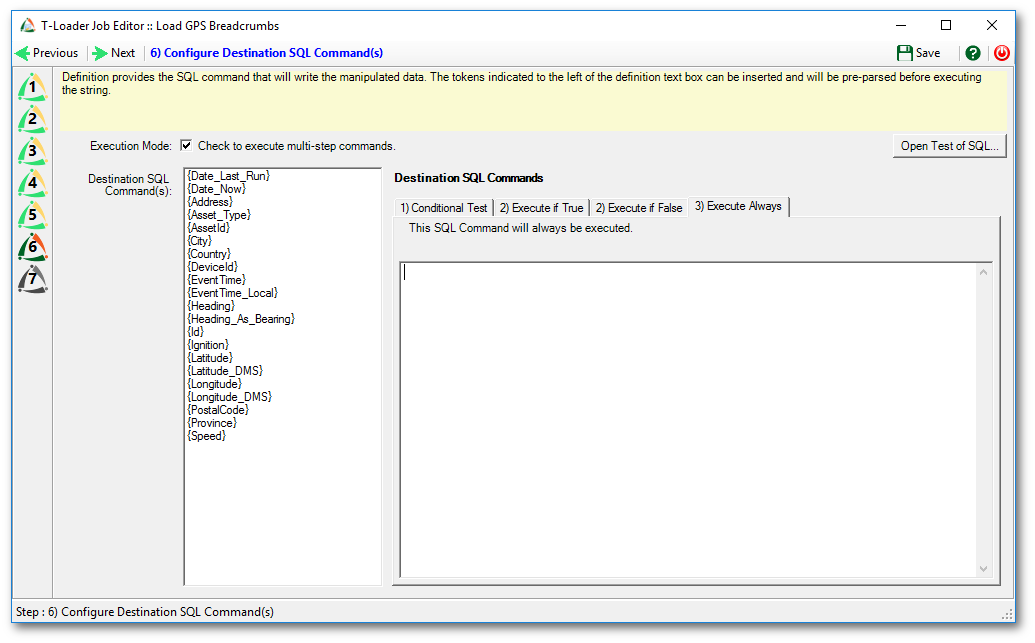

3) Execute Always

Our example does NOT require the use of an execute always sql statement. This is very useful if you want to write a log record of all rows read regardless of true or false condition logic.

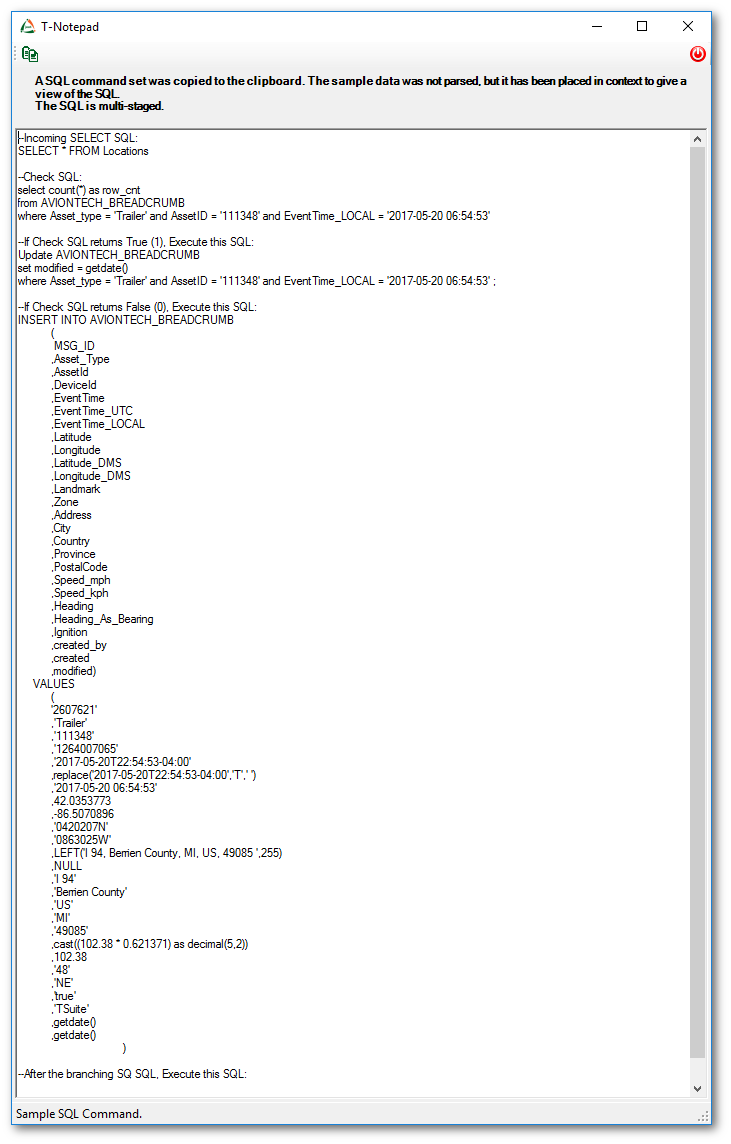

Open Test of SQL

Before going on to the next step, I always recommend performing a review of all the sql statements. A very useful feature of the tLoader editor is the Open Test of SQL. Clicking this button will take a sample row of the source information and translate it into all the sql statements used. You can do a review from with the tNotepad or you can cut and paste from tNotepad to any SQL user interface for debugging and testing. This can save the user a great deal of time in fine tuning the sql statements and the mapping between source and destination.

--Incoming SELECT SQL: SELECT * FROM Locations --Check SQL: select count(*) as row_cnt from AVIONTECH_BREADCRUMB where Asset_type = 'Trailer' and AssetID = '111348' and EventTime_LOCAL = '2017-05-20 06:54:53' --If Check SQL returns True (1), Execute this SQL: Update AVIONTECH_BREADCRUMB set modified = getdate() where Asset_type = 'Trailer' and AssetID = '111348' and EventTime_LOCAL = '2017-05-20 06:54:53' ; --If Check SQL returns False (0), Execute this SQL: INSERT INTO AVIONTECH_BREADCRUMB ( MSG_ID ,Asset_Type ,AssetId ,DeviceId ,EventTime ,EventTime_UTC ,EventTime_LOCAL ,Latitude ,Longitude ,Latitude_DMS ,Longitude_DMS ,Landmark ,Zone ,Address ,City ,Country ,Province ,PostalCode ,Speed_mph ,Speed_kph ,Heading ,Heading_As_Bearing ,Ignition ,created_by ,created ,modified) VALUES ( '2607621' ,'Trailer' ,'111348' ,'1264007065' ,'2017-05-20T22:54:53-04:00' ,replace('2017-05-20T22:54:53-04:00','T',' ') ,'2017-05-20 06:54:53' ,42.0353773 ,-86.5070896 ,'0420207N' ,'0863025W' ,LEFT('I 94, Berrien County, MI, US, 49085 ',255) ,NULL ,'I 94' ,'Berrien County' ,'US' ,'MI' ,'49085' ,cast((102.38 * 0.621371) as decimal(5,2)) ,102.38 ,'48' ,'NE' ,'true' ,'TSuite' ,getdate() ,getdate() ) --After the branching SQ SQL, Execute this SQL:

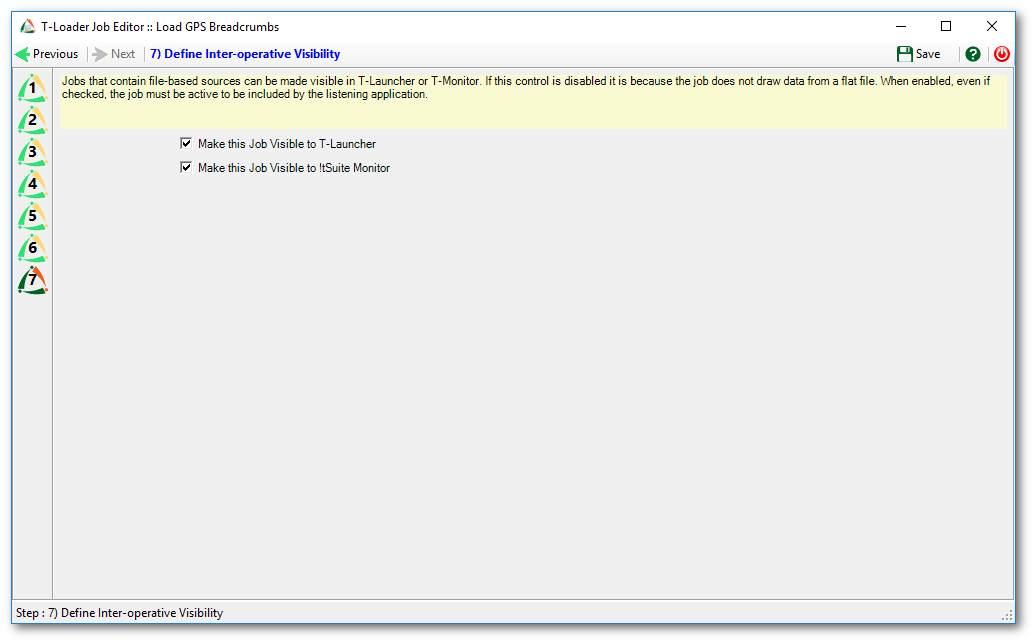

Step 7: Define Inter-operative Visibility

For this example we will be checking off both “Make This Job visible to T-Launcher” and “Make This Job Visible to !tSuite Monitor”.

Final step

Press the save button to save the job.

Now when the !tGateway connector reads the from the web service, it will place a file on a local folder. It will then be processed by tMonitor to be handed over to tLoader for execution.

Based on what gets read, will determine what is updated and what is rejected and what is inserted.

Sample Input

<ArionTrak> <Locations> <Address>PA 507</Address> <AssetId>1143</AssetId> <City>Monroe County</City> <Country>US</Country> <DeviceId>1761028021</DeviceId> <EventTime>2017-05-21T05:11:04-04:00</EventTime> <Heading>313</Heading> <Id>2609052</Id> <Ignition>true</Ignition> <Latitude>41.2331563</Latitude> <Longitude>-75.495779</Longitude> <PostalCode>18424</PostalCode> <Province>PA</Province> <Speed>0</Speed> <EventTime_Local>2017-05-21 01:11:04</EventTime_Local> <Latitude_DMS>0411359N</Latitude_DMS> <Longitude_DMS>0752944W</Longitude_DMS> <Heading_As_Bearing>NW</Heading_As_Bearing> <Asset_Type>Trailer</Asset_Type> </Locations> <Locations> <AssetId>1128</AssetId> <City>Battle Creek</City> <Country>US</Country> <DeviceId>1761028688</DeviceId> <EventTime>2017-05-21T05:11:19-04:00</EventTime> <Heading>355</Heading> <Id>2609053</Id> <Ignition>true</Ignition> <Latitude>42.2640566</Latitude> <Longitude>-85.2201203</Longitude> <PostalCode>49015</PostalCode> <Province>MI</Province> <Speed>0</Speed> <EventTime_Local>2017-05-21 01:11:19</EventTime_Local> <Latitude_DMS>0421550N</Latitude_DMS> <Longitude_DMS>0851312W</Longitude_DMS> <Heading_As_Bearing>N</Heading_As_Bearing> <Asset_Type>Trailer</Asset_Type> </Locations> </ArionTrak>

Sample Output

AT_ID MSG_ID Asset_Type AssetId DeviceId EventTime EventTime_UTC EventTime_LOCAL Latitude Longitude Latitude_DMS Longitude_DMS Landmark Zone Address City Country Province PostalCode Speed_mph Speed_kph Heading Heading_As_Bearing Ignition created_by created modified ----- ------- ---------- ------- ---------- ------------------- ------------------- ------------------- --------- ---------- ------------ ------------- -------- ------ ------- ------------- ------- -------- ---------- --------- --------- ------- ------------------ -------- ---------- ------------------- ------------------- 1284 2609052 Trailer 1143 1761028021 2017-05-21T05:11:04 2017-05-21 05:11:04 2017-05-21 01:11:04 41.233156 -75.495779 0411359N 0752944W (null) (null) PA 507 Monroe County US PA 18424 0.00 0.00 313 NW true TSuite 2017-05-21 01:16:04 2017-05-21 01:16:04 1285 2609053 Trailer 1128 1761028688 2017-05-21T05:11:19 2017-05-21 05:11:19 2017-05-21 01:11:19 42.264057 -85.220120 0421550N 0851312W (null) (null) Battle Creek US MI 49015 0.00 0.00 355 N true TSuite 2017-05-21 01:16:04 2017-05-21 01:16:04